In recent years, the demand for large language models (LLMs) has skyrocketed. Organizations and individuals are keen to harness the power of these models for various applications, from customer support to content generation. Building a private LLM allows you to maintain control over your data and customize the model to meet specific needs. In this article, we’ll explore how to build a private LLM, discussing essential components, best practices, and key considerations.

Understanding Large Language Models

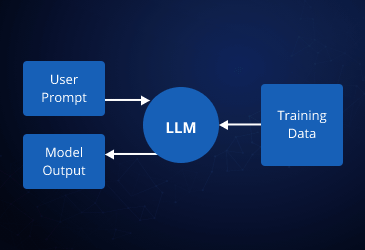

Before delving into how to build a private LLM, it helps to understand what an LLM is. A large language model is a type of artificial intelligence that uses deep learning to understand and generate human-like text. These models are trained on vast amounts of text and learn to predict the next token (a word or word fragment) from the context that precedes it.
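To make next-word prediction concrete, here is a toy sketch that counts which word follows which in a tiny corpus and predicts the most frequent follower. It is only an illustration of the prediction objective: real LLMs use neural networks over tokens, not word counts, and the function names and sample corpus are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Illustrative corpus (made up for this sketch).
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat" (follows "the" twice)
```

An LLM does the same kind of thing at a much larger scale: instead of looking at one previous word, it conditions on the whole preceding context.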

Step 1: Define Your Objectives

The first step in building a private LLM is to clearly define your objectives. What specific tasks do you want your LLM to perform? Common applications include:

- Customer support automation

- Content creation

- Data analysis

- Personalized recommendations

By identifying your goals, you can tailor your model to better serve your needs.

Step 2: Gather Data

Once you have your objectives in place, the next step is to gather data. The quality and quantity of the data used for training your LLM are crucial to its performance. Consider the following types of data:

- Text documents

- Web pages

- Books

- User-generated content

Make sure to focus on relevant and diverse data to ensure your model can generalize well to various scenarios. Additionally, you need to clean and preprocess your data to remove any noise or irrelevant information.
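As a rough sketch of what cleaning and preprocessing can look like, the snippet below strips HTML tags, normalizes whitespace, drops very short fragments, and removes exact duplicates. The helper names, the `min_words` threshold, and the sample documents are assumptions made for illustration; a production pipeline would usually add steps such as language filtering and near-duplicate detection.

```python
import html
import re


def clean_text(raw: str) -> str:
    """Strip HTML tags, decode entities, and collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)   # remove markup such as <p> or <br/>
    text = html.unescape(text)            # turn &amp; back into &
    text = re.sub(r"\s+", " ", text)      # collapse runs of whitespace
    return text.strip()


def preprocess(docs, min_words: int = 3):
    """Clean each document, then drop short fragments and exact duplicates."""
    seen = set()
    cleaned = []
    for doc in docs:
        text = clean_text(doc)
        key = text.lower()                # case-insensitive duplicate check
        if len(text.split()) < min_words or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Illustrative input (made up for this sketch).
raw_docs = [
    "<p>Reset your  password from the &amp; settings page.</p>",
    "reset your password from the & settings page.",
    "OK",
    "Refunds are processed within five days.",
]
print(preprocess(raw_docs))
```

Here the second document is dropped as a duplicate of the first and "OK" is dropped as too short, leaving two cleaned documents.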

Step 3: Choose the Right Framework

To build a private LLM, you’ll need to select an appropriate machine learning framework. Several popular frameworks can facilitate the development process, such as TensorFlow and PyTorch. When choosing a framework, consider the following:

- Ease of use: Look for a framework with a user-friendly interface and extensive documentation.

- Community support: A large community can provide valuable resources and support during development.

- Compatibility: Ensure that the framework supports the tools and libraries you plan to use.

Step 4: Model Selection and Architecture

The architecture of your LLM is a critical factor in its performance. There are various architectures to choose from, including:

- Transformer models

- Recurrent Neural Networks (RNNs)

- Long Short-Term Memory (LSTM) networks, a gated variant of RNNs

For most applications, transformer models are the preferred choice. Their self-attention mechanism relates distant positions in a text directly, and they process a sequence in parallel rather than one step at a time, which makes training on large datasets more efficient. When selecting a model, consider pre-trained options that can be fine-tuned on your specific dataset. Fine-tuning is typically far cheaper than training from scratch and often performs better when your own data is limited.
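The reason transformers cope well with long-range dependencies is self-attention: every position computes a weighted average over all other positions, so distant words are one step apart. Below is a minimal pure-Python sketch of scaled dot-product attention. It omits everything a real transformer layer adds (learned projections, multiple heads, masking), and the tiny vectors are invented for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Two identical keys receive equal weight, so the output is the mean of the values.
q = [[1.0, 0.0]]
k = [[1.0, 0.0], [1.0, 0.0]]
v = [[0.0, 2.0], [4.0, 6.0]]
print(attention(q, k, v))
```

In practice you would rely on the attention layers built into your chosen framework rather than writing this by hand.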

Step 5: Training the Model

Training your model is one of the most resource-intensive steps in building a private LLM. Here’s a simplified process:

- Split your data: Divide your dataset into training, validation, and test sets. This division helps evaluate your model’s performance effectively.

- Configure hyperparameters: Set hyperparameters such as learning rate, batch size, and number of epochs. Experiment with different configurations to find the optimal settings for your model.

- Training process: Use your chosen framework to train the model on the training dataset. Monitor its performance on the validation set to prevent overfitting.

- Fine-tuning: Once the initial training is complete, fine-tune the model using specific data relevant to your objectives. This step enhances the model’s accuracy for your intended applications.
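The steps above can be sketched in a framework-agnostic way. The snippet below splits a dataset, groups a few hyperparameters in one place, and stops training when validation loss stops improving. The names `TrainConfig`, `split_dataset`, and `train_with_early_stopping`, the default values, and the `train_one_epoch` and `evaluate` callbacks are all hypothetical; in a real project the callbacks would wrap your framework's training and evaluation code.

```python
import random
from dataclasses import dataclass


@dataclass
class TrainConfig:
    learning_rate: float = 3e-4   # illustrative defaults, not recommendations
    batch_size: int = 32
    num_epochs: int = 10
    patience: int = 2             # epochs without improvement before stopping


def split_dataset(examples, val_frac=0.1, test_frac=0.1, seed=0):
    """Shuffle deterministically, then split into train, validation, and test."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_test = int(len(items) * test_frac)
    n_val = int(len(items) * val_frac)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test


def train_with_early_stopping(config, train_one_epoch, evaluate):
    """Run epochs until validation loss fails to improve `patience` times in a row."""
    best_loss = float("inf")
    best_epoch = -1
    stale = 0
    for epoch in range(config.num_epochs):
        train_one_epoch(epoch)
        val_loss = evaluate(epoch)
        if val_loss < best_loss:
            best_loss, best_epoch, stale = val_loss, epoch, 0
        else:
            stale += 1
            if stale >= config.patience:
                break  # validation loss is no longer improving
    return best_epoch, best_loss


# Simulated validation losses stand in for a real model here.
losses = [1.0, 0.8, 0.9, 0.95, 0.5]
config = TrainConfig(num_epochs=5, patience=2)
print(train_with_early_stopping(config, lambda e: None, lambda e: losses[e]))
```

With these simulated losses, training stops after the fourth epoch and reports epoch 1 (loss 0.8) as the best checkpoint, which is the behavior you want when later epochs start to overfit.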

Step 6: Evaluate and Test

After training your private LLM, it’s essential to evaluate its performance. Use your test dataset to assess how well the model generalizes to new, unseen data. Key metrics to consider include:

- Accuracy

- Precision

- Recall

- F1 score

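These metrics will help you determine if your model meets your objectives. They apply most directly to classification-style tasks such as intent detection or ticket routing. For open-ended text generation, they are usually supplemented with perplexity on held-out text and human review of sample outputs. If the performance is lacking, consider revisiting previous steps to make necessary adjustments, such as gathering more data or tweaking the model architecture.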
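For a binary classification task, the four metrics listed above can be computed directly from the counts of true positives, false positives, and false negatives. This is a minimal sketch with made-up labels; the function names are chosen for this example, and most teams would use an established evaluation library instead.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for the class labeled `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return precision, recall, f1


# Illustrative labels (made up): 1 = "needs escalation", 0 = "does not".
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(accuracy(y_true, y_pred))
print(precision_recall_f1(y_true, y_pred))
```

In this example there are three true positives, one false positive, and one false negative, so accuracy, precision, recall, and F1 all come out to 0.75.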

Step 7: Deploying Your LLM

Once you are satisfied with your model’s performance, it’s time to deploy it. The deployment process can vary based on your specific needs and infrastructure. Some key considerations include:

- Hosting: Choose a suitable environment for hosting your model, whether it’s on-premises or in the cloud.

- API creation: Develop an API that allows users to interact with your model easily.

- Monitoring: Implement monitoring tools to track the model’s performance in real time. This step helps identify any issues or areas for improvement.
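To illustrate the API step, here is a minimal sketch of an HTTP endpoint built only on Python's standard library. The `/generate` path, the `prompt` and `completion` field names, and the `generate` function (a placeholder for your real model call) are all assumptions for this example. A production deployment would normally use a dedicated web framework and add authentication, rate limiting, and logging.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Hypothetical stand-in for the real model call."""
    return f"(model output for: {prompt})"


def handle_payload(payload) -> tuple[int, dict]:
    """Validate a decoded JSON body and return (HTTP status, response body)."""
    if not isinstance(payload, dict):
        return 400, {"error": "request body must be a JSON object"}
    prompt = payload.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "field 'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}


class GenerateHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/generate":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self._send(400, {"error": "invalid JSON"})
            return
        status, body = handle_payload(payload)
        self._send(status, body)

    def _send(self, status: int, body: dict):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host: str = "127.0.0.1", port: int = 8000):
    """Start the server; blocks until interrupted."""
    HTTPServer((host, port), GenerateHandler).serve_forever()
```

Calling `serve()` starts the server on localhost, after which a client can POST a JSON body such as `{"prompt": "Hello"}` to `/generate`. Keeping the validation in `handle_payload` separate from the HTTP plumbing makes it easy to test without opening a socket.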

Step 8: Continuous Improvement

The final step in building a private LLM is continuous improvement. As new data becomes available or as your objectives evolve, it’s essential to retrain and update your model regularly. This process will help maintain its effectiveness and relevance over time.
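One simple way to decide when to retrain is to track a quality score over recent requests and compare it against the score measured at deployment. The sketch below assumes you already have some per-request quality signal (for example, a user rating or an automated check). The `QualityMonitor` class, its thresholds, and the sample scores are hypothetical.

```python
from collections import deque


class QualityMonitor:
    """Flag retraining when the recent average score drops below the baseline."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline      # score measured at deployment time
        self.tolerance = tolerance    # allowed drop before flagging
        self.scores = deque(maxlen=window)

    def record(self, score: float) -> None:
        """Add one quality score; the oldest is dropped once the window is full."""
        self.scores.append(score)

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few bad requests do not trigger retraining.
        if len(self.scores) < self.scores.maxlen:
            return False
        recent = sum(self.scores) / len(self.scores)
        return recent < self.baseline - self.tolerance


# Illustrative usage with a tiny window and made-up scores.
monitor = QualityMonitor(baseline=0.9, tolerance=0.05, window=3)
for score in [0.9, 0.9, 0.9, 0.7, 0.7, 0.7]:
    monitor.record(score)
print(monitor.needs_retraining())
```

After the three low scores fill the window, the recent average (0.7) falls below the allowed floor (0.85), so the monitor signals that retraining is worth considering.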

Conclusion

Building a private LLM is a complex but rewarding endeavor. By following the steps outlined in this article, you can create a customized language model tailored to your specific needs. From defining objectives and gathering data to training, evaluating, and deploying your model, each stage is crucial to achieving success. Remember, the journey doesn’t end with deployment; continuous improvement is key to keeping your LLM effective in an ever-changing landscape.
