Artificial Intelligence (AI) has become an integral part of modern technology, shaping everything from personalized recommendations to complex decision-making processes. As AI systems grow more sophisticated, ensuring that they are trustworthy and used responsibly becomes crucial. This is where AI TRiSM comes in. In this article, we explore what AI TRiSM is, why it matters, and how it fosters responsible AI development.

What is AI TRiSM?

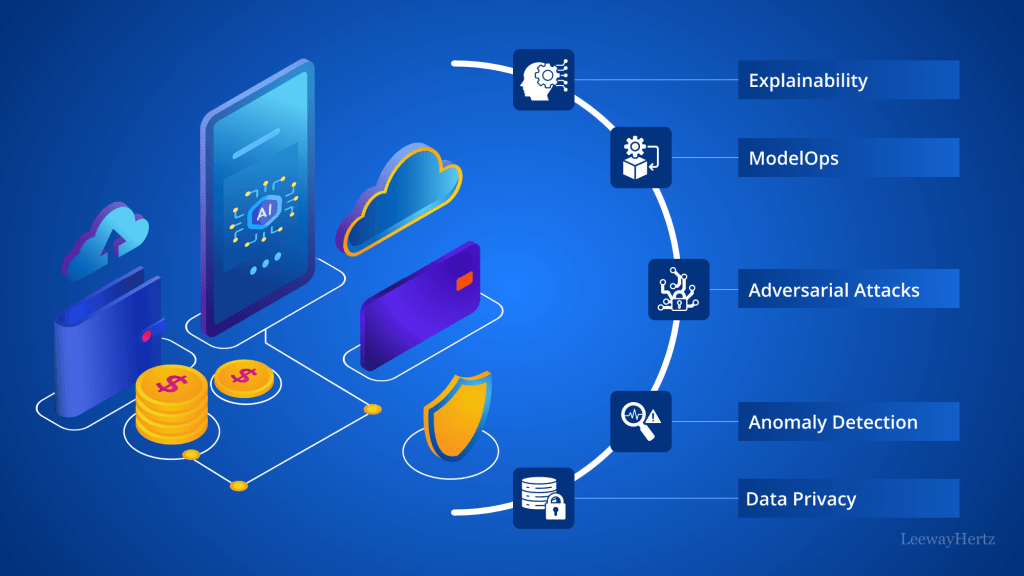

AI TRiSM, which stands for AI Trust, Risk, and Security Management, is a framework introduced by Gartner to address the challenges of deploying and operating AI systems. It encompasses strategies and practices aimed at ensuring that AI technologies are developed and used in a transparent, accountable, and secure manner. Its three core principles (trustworthiness, risk management, and security) are essential for maintaining the integrity and ethical use of AI.

The Importance of Trust in AI

Trust is a fundamental component of AI TRiSM. AI systems can be integrated into society effectively only if people can rely on them. That trust rests on several factors:

- Transparency: Users should be able to see and understand how an AI system reaches its decisions. This visibility into the decision-making process is essential for building trust in AI technologies.

- Accountability: Developers and organizations must take responsibility for the AI systems they create. This includes addressing any biases or errors that arise and ensuring that the AI behaves in line with ethical standards.

- Explainability: Where transparency concerns how a system works overall, explainability concerns individual decisions. AI TRiSM promotes systems that can give clear reasons for a specific output, helping users understand the rationale behind it.
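
To make explainability concrete, here is a minimal sketch for the simplest possible case: a linear scoring model, where each feature's contribution to a decision is just its weight times its value. The model, the feature names, and the weights are all hypothetical and chosen only for illustration; real systems usually need dedicated explanation techniques.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS: Dict[str, float] = {
    "income": 0.5,
    "debt_ratio": -2.0,
    "years_employed": 0.25,
}
BIAS = 1.0


def score(features: Dict[str, float]) -> float:
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return each feature's contribution, largest absolute effect first."""
    contributions = [(name, WEIGHTS[name] * value) for name, value in features.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}
print("score:", score(applicant))
for name, contribution in explain(applicant):
    print(f"{name}: {contribution:+.2f}")
```

The output lists the features that pushed the score up or down the most, which is the kind of per-decision rationale a user or reviewer can inspect.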

Managing Risks Associated with AI

Risk management is another crucial aspect of AI TRiSM. AI systems can present various risks, including those related to safety, privacy, and ethical considerations. Effective risk management involves:

- Identifying Potential Risks: Organizations must conduct thorough risk assessments to identify potential issues that could arise from AI system deployment. This includes evaluating risks related to data privacy, security vulnerabilities, and unintended consequences of AI behavior.

- Implementing Mitigation Strategies: Once risks are identified, mitigation strategies should be developed and implemented. This could involve adopting robust security measures, establishing clear guidelines for data usage, and incorporating mechanisms to handle unexpected outcomes.

- Monitoring and Evaluation: Ongoing monitoring and evaluation are essential to ensure that AI systems continue to operate safely and effectively. Regular reviews help in detecting and addressing any emerging risks, ensuring that the AI remains aligned with ethical and operational standards.
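
The three steps above can be sketched as a simple risk register. This is only one possible approach, assuming a common likelihood-times-impact scoring scheme on 1 to 5 scales; the `Risk` class, the threshold, and the example entries are hypothetical and not prescribed by AI TRiSM.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)
    mitigation: str = ""

    @property
    def score(self) -> int:
        """Simple likelihood x impact score (an assumed scheme)."""
        return self.likelihood * self.impact


def prioritize(risks: List[Risk], threshold: int = 10) -> List[Risk]:
    """Return risks at or above the threshold, highest score first."""
    flagged = [risk for risk in risks if risk.score >= threshold]
    return sorted(flagged, key=lambda risk: risk.score, reverse=True)


# Hypothetical register entries for illustration.
register = [
    Risk("Training data leaks personal information", 3, 5, "Minimize and pseudonymize data"),
    Risk("Model output drifts after deployment", 4, 3, "Review output distribution regularly"),
    Risk("Chatbot gives an off-brand reply", 2, 2),
]

for risk in prioritize(register):
    print(risk.score, risk.name, "->", risk.mitigation or "no mitigation recorded")
```

Re-running `prioritize` whenever the register is updated gives a lightweight form of the ongoing review described above.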

Ensuring Security in AI Systems

Security is the third core element of AI TRiSM. As AI systems become more prevalent, they must be protected against malicious attacks and data breaches. Key security considerations include:

- Data Protection: AI systems often handle vast amounts of sensitive data. Ensuring robust data protection measures, such as encryption and access controls, is essential for safeguarding user information and maintaining trust.

- Defensive Measures: Implementing defensive measures against potential cyberattacks is crucial. This includes using secure coding practices, conducting regular security audits, and staying informed about emerging threats.

- Incident Response: Having a well-defined incident response plan is necessary for addressing any security breaches that may occur. This plan should outline the steps to take in the event of a security incident, including communication strategies and remedial actions.
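
As an illustration of access controls that also support incident response, the sketch below combines a deny-by-default permission check with an audit trail. The roles, permissions, and `is_allowed` helper are hypothetical; a production system would rely on its platform's identity and logging services rather than in-memory structures.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical roles and permissions for an illustrative ML platform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data_scientist": {"read_features", "train_model"},
    "auditor": {"read_audit_log"},
}

# In-memory audit trail; every access decision is recorded here.
audit_log: List[dict] = []


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


print(is_allowed("data_scientist", "train_model"))     # True
print(is_allowed("data_scientist", "read_audit_log"))  # False
print(is_allowed("intern", "read_features"))           # False (unknown role)

# Denied attempts are a natural starting point when investigating an incident.
denied = [entry for entry in audit_log if not entry["allowed"]]
print(len(denied), "denied attempts recorded")
```

Logging both granted and denied requests means the same record serves routine security audits and post-incident investigation.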

The Role of AI TRiSM in Ethical AI Development

AI TRiSM plays a significant role in promoting ethical AI development. By adhering to the principles of trust, risk management, and security, organizations can ensure that their AI systems are developed and deployed responsibly. This helps not only to build public trust but also to foster a positive impact on society.

Ethical AI development involves considering the broader implications of AI technologies and striving to mitigate any negative effects. AI TRiSM supports this by encouraging practices that align with ethical standards and by providing a framework for addressing ethical dilemmas that may arise.

Conclusion

In summary, AI TRiSM is a comprehensive framework designed to ensure that AI systems are trustworthy, secure, and responsibly managed. By focusing on trust, risk management, and security, AI TRiSM helps organizations navigate the complexities of AI technology and promotes ethical practices in AI development. As AI continues to evolve, adhering to AI TRiSM principles will be crucial for maintaining the integrity and societal benefits of these powerful technologies. Embracing AI TRiSM is not just a best practice but a necessary step toward ensuring that AI serves humanity in a safe, ethical, and beneficial manner.
