In the evolving field of machine learning, one of the most significant challenges is adapting large pre-trained models to new tasks or datasets efficiently. This is where Parameter-efficient Fine-tuning (PEFT) comes into play. PEFT is a family of techniques that fine-tune only a small subset of a model's parameters, or a small number of newly added ones. This lets pre-trained models be adapted to new tasks with far fewer resources. In this article, we look at how PEFT works, its main techniques and benefits, and where it is being applied.

Understanding Parameter-efficient Fine-tuning (PEFT)

Parameter-efficient Fine-tuning (PEFT) addresses the need to adapt large pre-trained models efficiently. Traditional (full) fine-tuning updates all of a model's parameters, which is computationally expensive and time-consuming. It also produces a complete copy of the model for every task. PEFT instead trains only a small fraction of the model's parameters. This significantly reduces the computational overhead, and the results often match, and occasionally exceed, the performance of full fine-tuning.

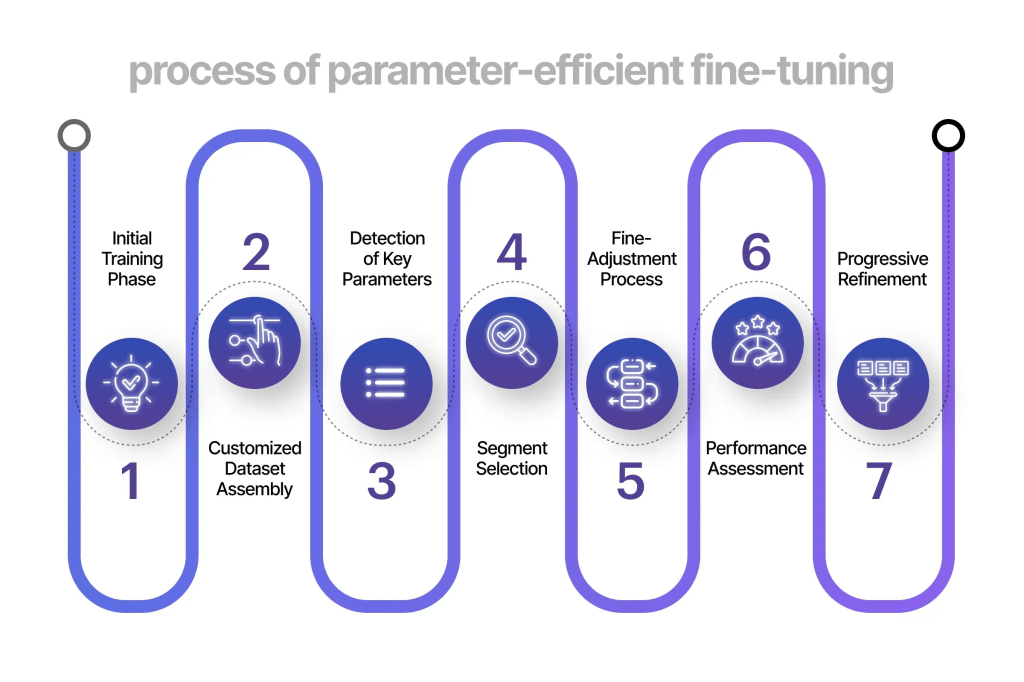

How PEFT Works

PEFT operates on the principle that not all parameters of a pre-trained model need to be adjusted to achieve effective fine-tuning. Instead of updating every weight, PEFT methods freeze most or all of the pre-trained parameters. They then train one of two things: a small selected subset of the existing parameters (such as the bias terms), or a small number of newly added parameters (such as adapter layers or low-rank update matrices). Gradients and optimizer state are needed only for these trainable parameters, so adaptation requires far fewer computational resources.
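The freeze-then-select principle can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not any library's API. The toy model is a hypothetical mapping from parameter names to shapes, with illustrative sizes. The selection rule used here is "train only the bias vectors", which is the idea behind BitFit described below.

```python
from math import prod

# Hypothetical toy model: parameter name -> shape (sizes are illustrative only).
MODEL_SHAPES = {
    "layer1.weight": (768, 768),
    "layer1.bias": (768,),
    "layer2.weight": (3072, 768),
    "layer2.bias": (3072,),
    "classifier.weight": (2, 3072),
    "classifier.bias": (2,),
}


def select_trainable(shapes, is_trainable):
    """Freeze everything, then unfreeze only the parameters accepted by `is_trainable`."""
    return {name: is_trainable(name) for name in shapes}


def count_params(shapes, trainable_flags=None):
    """Count all parameters, or only those flagged as trainable."""
    return sum(
        prod(shape)
        for name, shape in shapes.items()
        if trainable_flags is None or trainable_flags[name]
    )


# Selection rule for this sketch: train only the bias vectors.
flags = select_trainable(MODEL_SHAPES, lambda name: name.endswith(".bias"))
total = count_params(MODEL_SHAPES)
trainable = count_params(MODEL_SHAPES, flags)
print(f"trainable: {trainable} of {total} ({100 * trainable / total:.2f}%)")
```

With these sizes, the script reports that 3842 of 2959106 parameters (about 0.13%) are trainable. To choose a different subset in this sketch, you would change the predicate, for example to also train the `classifier.*` parameters. Real frameworks express the same idea through per-parameter trainable flags, such as `requires_grad` in PyTorch.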

Key Techniques in PEFT

- Low-rank Adaptation (LoRA):

LoRA is a popular PEFT method. It keeps each pre-trained weight matrix W (of size d × k) frozen and learns the update to it as the product of two much smaller matrices, ΔW = BA. Here B is d × r, A is r × k, and the rank r is much smaller than d and k. Only A and B are trained. This reduces the number of trainable parameters for that matrix from d·k to r·(d + k).

- Adapters:

Adapters are small neural network modules inserted into the layers of the pre-trained model. They are typically a bottleneck that projects the hidden state down to a low dimension and back up again. During fine-tuning, only these adapters are updated, while the rest of the model's parameters remain frozen. Because the original weights are not modified, the knowledge encoded in them is preserved while the model adapts to the new task.

- BitFit:

BitFit fine-tunes only the bias terms in the model's layers. Bias vectors make up a tiny fraction of a typical Transformer's parameters (well under 1%), so limiting updates to them achieves parameter-efficient adaptation with minimal computational cost.
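Here is a minimal sketch of the first two techniques. It assumes plain Python lists as matrices, with no ML framework, and every name is hypothetical. Training loops and gradients are omitted.

- `LoRALinear` keeps the pre-trained weight W frozen and adds a trainable low-rank update B·A scaled by alpha / r. B starts at zero, as in the original LoRA paper, so the layer initially behaves exactly like the pre-trained one.
- `BottleneckAdapter` shows one common adapter design: a down-projection, a ReLU, an up-projection, and a residual connection. Its up-projection is zero-initialized here so the adapter starts as an identity map, in the spirit of the near-identity initialization used for adapters.

```python
import random


def matvec(m, v):
    """Multiply a matrix `m` (list of rows) by a vector `v`."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


def gaussian(rows, cols, rng, std=0.01):
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x). W stays frozen; only A and B would be trained."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight                # frozen pre-trained W, d_out x d_in
        self.A = gaussian(rank, d_in, rng)  # trainable, r x d_in, small random init
        self.B = zeros(d_out, rank)         # trainable, d_out x r, zero init so B A = 0
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


class BottleneckAdapter:
    """h + W_up relu(W_down h); only W_down and W_up would be trained."""

    def __init__(self, dim, bottleneck, seed=0):
        rng = random.Random(seed)
        self.down = gaussian(bottleneck, dim, rng)  # trainable, bottleneck x dim
        self.up = zeros(dim, bottleneck)            # trainable, dim x bottleneck, zero init => identity at start

    def forward(self, h):
        z = [max(0.0, v) for v in matvec(self.down, h)]  # ReLU on the bottleneck
        return [h_i + u_i for h_i, u_i in zip(h, matvec(self.up, z))]  # residual connection

    def trainable_params(self):
        return len(self.down) * len(self.down[0]) + len(self.up) * len(self.up[0])


# Usage: with B = 0, the LoRA layer initially reproduces the frozen layer exactly.
layer = LoRALinear([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], rank=2)
print(layer.forward([1.0, 2.0, 3.0]), layer.trainable_params())
```

Only `A`, `B`, `down`, and `up` would receive gradient updates during training. For scale, take a 1024 × 1024 weight with r = 8. The two LoRA factors hold 2 × 8 × 1024 = 16,384 values, about 1.6% of the 1,048,576 entries in W.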

Benefits of Parameter-efficient Fine-tuning (PEFT)

Reduced Computational Cost

One of the most significant advantages of PEFT is the reduction in computational resources required for fine-tuning. Gradients and optimizer states are needed only for the trainable parameters, so PEFT substantially reduces the memory and processing power required. This makes it practical to fine-tune large models on modest hardware.
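A back-of-the-envelope estimate shows where the memory savings come from. The sketch below makes several assumptions:

- Training is in full precision (fp32, 4 bytes per value) with the Adam optimizer.
- Adam keeps two moment estimates per trainable parameter, in addition to its gradient.
- The frozen weights must still be stored, so only the gradient and optimizer-state terms shrink.
- The model size and trainable fraction are hypothetical, and activation memory is ignored.

```python
BYTES_PER_VALUE = 4  # fp32


def training_memory_gib(total_params, trainable_params, bytes_per_value=BYTES_PER_VALUE):
    """Rough memory for weights + gradients + Adam moments, ignoring activations.

    Every parameter is stored once; each *trainable* parameter additionally needs
    one gradient and two Adam moment estimates at the same precision.
    """
    weights = total_params * bytes_per_value
    grads = trainable_params * bytes_per_value
    adam_moments = 2 * trainable_params * bytes_per_value
    return (weights + grads + adam_moments) / 2**30  # bytes -> GiB


total = 1_000_000_000           # hypothetical 1B-parameter model
peft_trainable = total // 1000  # hypothetical: 0.1% of parameters trainable

full = training_memory_gib(total, total)
peft = training_memory_gib(total, peft_trainable)
print(f"full fine-tuning: {full:.1f} GiB, PEFT: {peft:.1f} GiB")
```

Under these assumptions, the script prints about 14.9 GiB for full fine-tuning versus 3.7 GiB with PEFT. Mixed precision, quantization, and activation memory shift both numbers in practice, so treat this as an illustration of the trend rather than a measurement.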

Faster Fine-tuning

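PEFT also tends to speed up adaptation to new tasks. Gradients and optimizer updates are computed only for the small set of trainable parameters, so each training step is typically cheaper than in full fine-tuning. The forward and backward passes, however, still run through the entire frozen model. This speed-up is particularly beneficial when time is a critical factor.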

Preservation of Pre-trained Knowledge

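Methods such as LoRA and adapters leave the pre-trained weights themselves unchanged. The original model can therefore always be recovered by removing the task-specific parameters, and one base model can be shared across many tasks. Because only a small number of parameters change, PEFT can also reduce how much the adapted model forgets about the tasks it was originally trained on, although this is not guaranteed.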
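Because LoRA-style methods never modify the pre-trained weights, one frozen base matrix can serve several tasks. Each task stores only its own small update, which is applied on demand. This is a minimal sketch assuming plain-Python matrices, with hypothetical task names and illustrative values. The effective weight for a task is W + (alpha / r)·B·A, and requesting no task returns W unchanged. Merging the update into a copy of W like this is also how LoRA avoids adding inference latency.

```python
def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def effective_weight(base, lora, alpha):
    """Return W + (alpha / r) * B @ A as a new matrix, leaving the frozen base W untouched."""
    if lora is None:
        return [row[:] for row in base]  # no task selected: the original pre-trained weights
    A, B = lora["A"], lora["B"]
    scale = alpha / len(A)  # r = number of rows of A
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(base, delta)]


# Frozen pre-trained weight shared by every task (2 x 2, illustrative values).
W = [[1.0, 2.0], [3.0, 4.0]]

# Hypothetical per-task rank-1 updates: A is r x d_in, B is d_out x r.
task_updates = {
    "sentiment": {"A": [[1.0, 0.0]], "B": [[0.5], [0.0]]},
    "topic": {"A": [[0.0, 1.0]], "B": [[0.0], [2.0]]},
}

for task in ["sentiment", "topic", None]:
    print(task, effective_weight(W, task_updates.get(task), alpha=1.0))
```

Switching tasks here only means picking a different small entry from `task_updates`. The shared `W` is never overwritten, so the base model remains available.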

Versatility Across Tasks

PEFT is not tied to a single modality and has been applied across a wide range of tasks and domains. In natural language processing, computer vision, speech, and beyond, PEFT provides an efficient way to fine-tune models for specific applications.

Applications of Parameter-efficient Fine-tuning (PEFT)

Natural Language Processing (NLP)

In NLP, PEFT has been applied successfully to tasks like text classification, sentiment analysis, and machine translation. Researchers can fine-tune pre-trained language models such as BERT or GPT with PEFT techniques and reach performance competitive with full fine-tuning at a fraction of the computational cost.

Computer Vision

In computer vision, PEFT is used to adapt models for tasks such as image classification, object detection, and segmentation. Techniques like LoRA and adapters enable efficient fine-tuning of models like ResNet or Vision Transformers, making them suitable for specific visual tasks.

Speech Recognition

PEFT is also applied in speech recognition, where adapting large models to recognize different accents, dialects, or languages can be computationally expensive. By using PEFT methods, researchers can fine-tune models for accurate speech recognition across diverse linguistic inputs.

Future Directions and Challenges

While PEFT offers numerous benefits, there are still challenges to be addressed. One of the primary challenges is identifying the optimal subset of parameters to fine-tune for different tasks. Additionally, ensuring that PEFT methods are robust across various model architectures and applications remains an area of active research.

The future of PEFT looks promising, with ongoing work aimed at improving its efficiency and effectiveness. Researchers are exploring hybrid approaches that combine different PEFT techniques to achieve even better performance. As PEFT continues to evolve, it is increasingly becoming a standard option for fine-tuning large machine learning models.

Conclusion

Parameter-efficient Fine-tuning (PEFT) is changing the way we adapt pre-trained models to new tasks. By updating only a small subset of parameters, PEFT provides a resource-efficient, fast, and versatile approach to fine-tuning. As the field of machine learning continues to advance, PEFT is likely to play a central role in making large models more accessible and adaptable across domains.
